High Performance Computing on AWS

I ran a project to deploy an HPC cluster using on-demand Amazon Elastic Compute Cloud (EC2) resources. The cluster gives researchers the compute resources to quickly run mathematical simulations across very large datasets. The deployment replaced aging on-premises HPC hardware and was an opportunity to trial Amazon Web Services (AWS) in a hybrid cloud configuration.

High security implementation:

- One-way firewall rules between the company network and AWS (the company connects out to AWS resources; AWS resources can’t connect in)

- Encryption of data in transit and at rest

- AWS Direct Connect link between the company and AWS, with a VPN running over Direct Connect

- AWS Identity and Access Management (IAM)

- All authentication via Active Directory Federation Services (ADFS), with AWS Security Token Service (STS) issuing temporary AWS API keys as required. No IAM user accounts or long-term AWS API keys. The AWS console and AWS API cannot be accessed without access to our internal ADFS server

- All authorisation via IAM role policies following the principle of least privilege

- Multi-factor authentication (MFA) on the root account, with the token held by senior management

- Amazon CloudWatch and AWS Config for auditing and change notification, deployed across all AWS regions

- AWS costs monitored by a cloud service

- Unusual activity (e.g. IAM policy changes) generates alerts that must be acknowledged

- Security configuration audited by a specialist consultancy firm
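
The ADFS-to-STS flow in the list above can be sketched in Python. This is a minimal sketch, not our original PowerShell implementation. It assumes the base64-encoded SAML response has already been obtained from ADFS, and that ADFS emits the standard `https://aws.amazon.com/SAML/Attributes/Role` attribute. The STS call is the boto3 `assume_role_with_saml` operation. The function names `roles_from_assertion` and `temporary_credentials` are hypothetical.

```python
import base64
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

# SAML attribute that AWS reads the role/provider ARN pairs from.
ROLE_ATTRIBUTE = "https://aws.amazon.com/SAML/Attributes/Role"
SAML_ASSERTION_NS = "urn:oasis:names:tc:SAML:2.0:assertion"


def roles_from_assertion(saml_assertion_b64: str) -> List[Tuple[str, str]]:
    """Return (role_arn, principal_arn) pairs found in a base64 SAML response."""
    root = ET.fromstring(base64.b64decode(saml_assertion_b64))
    pairs = []
    for attr in root.iter(f"{{{SAML_ASSERTION_NS}}}Attribute"):
        if attr.get("Name") != ROLE_ATTRIBUTE:
            continue
        for value in attr.findall(f"{{{SAML_ASSERTION_NS}}}AttributeValue"):
            if value.text is None:
                continue
            # Each value is "role_arn,provider_arn"; the order may vary.
            parts = [part.strip() for part in value.text.split(",")]
            if len(parts) != 2:
                continue
            role_arn, principal_arn = parts
            if ":saml-provider/" in role_arn:
                role_arn, principal_arn = principal_arn, role_arn
            pairs.append((role_arn, principal_arn))
    return pairs


def temporary_credentials(sts_client, saml_assertion_b64: str, role_arn: str,
                          principal_arn: str, duration: int = 3600) -> Dict[str, str]:
    """Exchange the ADFS SAML assertion for temporary AWS API keys via STS."""
    response = sts_client.assume_role_with_saml(
        RoleArn=role_arn,
        PrincipalArn=principal_arn,
        SAMLAssertion=saml_assertion_b64,
        DurationSeconds=duration,
    )
    creds = response["Credentials"]
    # Environment variable names understood by the AWS CLI and SDKs.
    return {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["SessionToken"],
    }
```

In use, `sts_client` would be `boto3.client("sts")`. `AssumeRoleWithSAML` does not itself require AWS security credentials, which is what makes a setup with no long-term API keys workable.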

High levels of automation:

- AWS infrastructure (VPC, subnets, security groups, routes and network ACLs) all managed via an AWS CloudFormation template

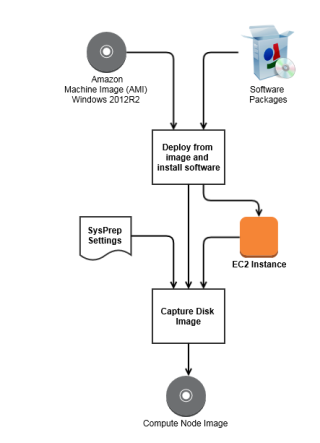

- ‘AMI Bakery’ service to automatically generate disk images (AMIs) monthly with the latest software updates, injecting steps into the AWS Sysprep file so that new instances join the AD domain during first boot

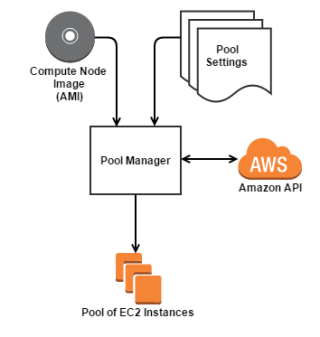

- ‘Pool Manager’ service to deploy and maintain a pool of 64 EC2 instances, performing rolling replacement when new disk images are available

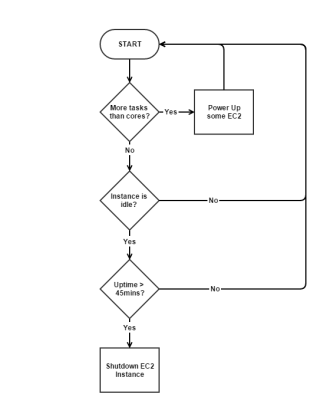

- ‘Auto Grow’ service to power up compute nodes when there are grid computing jobs in the scheduler queue, and power them down when there are no more tasks and they’re near the end of an AWS hourly billing cycle

- All scheduled and ad hoc tasks scripted in PowerShell

- HPC scheduler and compute node software packaged for unattended installation

- Two HPC services deployed: first Microsoft HPC Pack 2012 R2, later replaced by MathWorks MATLAB Job Scheduler
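
The CloudFormation-managed network layer from the automation list above can be illustrated with a small Python function that builds a template and prints it as JSON. The resource types and property names are standard CloudFormation. The CIDR ranges, logical resource names and the single RDP ingress rule are hypothetical placeholders. A real template would also define route tables, network ACLs and the VPN attachment.

```python
import json


def network_template(vpc_cidr: str = "10.0.0.0/16",
                     subnet_cidr: str = "10.0.1.0/24",
                     corporate_cidr: str = "192.168.0.0/16") -> dict:
    """Build a minimal CloudFormation template for the HPC network layer (sketch)."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "HPC cluster network (illustrative sketch)",
        "Resources": {
            "HpcVpc": {
                "Type": "AWS::EC2::VPC",
                "Properties": {
                    "CidrBlock": vpc_cidr,
                    "EnableDnsSupport": True,
                    "EnableDnsHostnames": True,
                },
            },
            "ComputeSubnet": {
                "Type": "AWS::EC2::Subnet",
                "Properties": {"VpcId": {"Ref": "HpcVpc"}, "CidrBlock": subnet_cidr},
            },
            "ComputeNodeSecurityGroup": {
                "Type": "AWS::EC2::SecurityGroup",
                "Properties": {
                    "GroupDescription": "HPC compute nodes",
                    "VpcId": {"Ref": "HpcVpc"},
                    # Hypothetical rule: allow RDP only from the (placeholder) corporate range.
                    "SecurityGroupIngress": [
                        {"IpProtocol": "tcp", "FromPort": 3389, "ToPort": 3389,
                         "CidrIp": corporate_cidr},
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    # The printed JSON could be passed to CloudFormation as a template body.
    print(json.dumps(network_template(), indent=2))
```

Generating the template from code keeps CIDR ranges and names in one place. A hand-written YAML or JSON template works equally well.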

I gained the AWS Certified Solutions Architect Associate certification and presented at an AWS User Group UK meetup. I got in touch with the AWS .NET SDK team in the US and briefed them on how we’d implemented AD authentication to the AWS API via PowerShell, SAML and ADFS. The AWS .NET SDK team subsequently rolled ADFS support into an SDK release.

AMI Bakery Service

Update: before HashiCorp Packer existed, we rolled our own image builder using PowerShell. Nowadays, use Packer! New disk images are created monthly with the latest Microsoft updates.
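
The monthly bake can be triggered by a simple date check. This is a minimal sketch, assuming the bake is tied to Microsoft's Patch Tuesday (the second Tuesday of each month). The two-day delay, the `hpc-node-YYYY-MM` naming scheme and all function names are hypothetical. The image build itself would be done by Packer or the original PowerShell builder.

```python
import datetime as dt


def patch_tuesday(year: int, month: int) -> dt.date:
    """Second Tuesday of the month, when Microsoft releases its monthly updates."""
    first = dt.date(year, month, 1)
    # date.weekday(): Monday == 0, Tuesday == 1, ...
    days_to_first_tuesday = (1 - first.weekday()) % 7
    return first + dt.timedelta(days=days_to_first_tuesday + 7)


def bake_date(year: int, month: int, delay_days: int = 2) -> dt.date:
    """Hypothetical policy: bake a couple of days after Patch Tuesday."""
    return patch_tuesday(year, month) + dt.timedelta(days=delay_days)


def ami_name(prefix: str, year: int, month: int) -> str:
    """Date-stamped image name, e.g. 'hpc-node-2016-03' (hypothetical scheme)."""
    return f"{prefix}-{year:04d}-{month:02d}"


def bake_due(today: dt.date, latest_ami_name: str, prefix: str = "hpc-node") -> bool:
    """True once this month's bake date has passed and no image exists for this month yet."""
    return (today >= bake_date(today.year, today.month)
            and latest_ami_name != ami_name(prefix, today.year, today.month))
```

A daily scheduled task could call `bake_due` and, when it returns `True`, start the image build and register the result under `ami_name(...)`.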

Pool Manager Service

This service launches a pool of compute nodes from the compute node disk image produced by AMI Bakery. It also terminates nodes built from an image older than the latest AMI, and these are automatically replaced. Launching a new machine from an AMI took 10 minutes on AWS, so we maintain a pool of pre-deployed machines that are quicker to power up on demand.
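
The Pool Manager's rolling-replacement decision can be expressed as a pure planning function. This is a sketch, not the original service. It assumes the image list comes from EC2 `DescribeImages`, whose entries include `ImageId` and an ISO 8601 `CreationDate`. `Node`, `plan_pool` and the batch size of 8 are hypothetical. The actual launch and terminate calls (for example boto3 `run_instances` / `terminate_instances`) are left out.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Node:
    instance_id: str
    image_id: str  # AMI the instance was launched from


def latest_image(images: List[dict]) -> str:
    """Newest AMI from a DescribeImages-style list; ISO 8601 CreationDate strings sort chronologically."""
    return max(images, key=lambda image: image["CreationDate"])["ImageId"]


def plan_pool(nodes: List[Node], latest_ami: str, pool_size: int = 64,
              batch_size: int = 8) -> Tuple[List[str], int]:
    """Return (instance IDs to terminate, number of instances to launch).

    Nodes built from an older AMI are replaced at most `batch_size` at a time,
    so most of the pool stays available during a rolling replacement.
    """
    stale = [node.instance_id for node in nodes if node.image_id != latest_ami]
    to_terminate = stale[:batch_size]
    remaining = len(nodes) - len(to_terminate)
    # Top the pool back up to its target size from the latest image.
    to_launch = max(pool_size - remaining, 0)
    return to_terminate, to_launch
```

Running the plan on each cycle lets the pool converge on the newest image a batch at a time, without ever draining it.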

Auto Grow Service

AWS Auto Scaling is not aware of the HPC scheduler application, and it only evaluates metrics once every 5 minutes. We also wanted to keep machines running once they were up (and already paid for) until they neared the end of a billed compute hour; per-minute billing would have been a better fit for this kind of workload. So we built a service that monitors the scheduler job queue and manages the power state of the pool of machines.
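
One polling cycle of the Auto Grow decision can be sketched as follows. The sketch assumes hourly billing counted in whole hours from each instance start, and that each queued task needs one node. The queue length and per-node `busy` flag would come from the HPC scheduler, which is not queried here. `ComputeNode`, `plan_power` and the 5-minute stop window are hypothetical.

```python
import datetime as dt
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ComputeNode:
    instance_id: str
    running_since: Optional[dt.datetime]  # None when the instance is stopped
    busy: bool = False                    # running a scheduler task


def minutes_into_billing_hour(node: ComputeNode, now: dt.datetime) -> float:
    """Minutes elapsed in the current billed hour, assuming hours count from the start time."""
    elapsed = (now - node.running_since).total_seconds() / 60
    return elapsed % 60


def plan_power(nodes: List[ComputeNode], queued_tasks: int, now: dt.datetime,
               stop_window_minutes: int = 5) -> Tuple[List[str], List[str]]:
    """Return (instance IDs to start, instance IDs to stop) for one polling cycle."""
    running = [n for n in nodes if n.running_since is not None]
    stopped = [n for n in nodes if n.running_since is None]
    idle = [n for n in running if not n.busy]

    # Grow: start stopped nodes for queued tasks that idle nodes can't absorb.
    shortfall = max(queued_tasks - len(idle), 0)
    to_start = [n.instance_id for n in stopped[:shortfall]]

    # Shrink: only when the queue is empty, and only idle nodes that are
    # close to the end of an hour that has already been paid for.
    to_stop: List[str] = []
    if queued_tasks == 0:
        to_stop = [n.instance_id for n in idle
                   if minutes_into_billing_hour(n, now) >= 60 - stop_window_minutes]
    return to_start, to_stop
```

Polling the scheduler queue directly, rather than waiting for a CloudWatch metric, lets the service react faster than the 5-minute metric interval.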